The assistant professor of communication design continues her research into AI and algorithmic bias with two new papers presented at the 73rd annual conference of the International Communication Association.

Chris (Cheng) Chen, an assistant professor in the Communication Design Department, presented two papers examining artificial intelligence at the 73rd annual conference of the International Communication Association (ICA), held May 25-29 in Toronto.

One of the leading associations in the communication discipline, ICA invited scholars from across the globe to explore how authenticity has become a variable, rather than a constant, in public discourse and popular culture, and with what relational, social, political, and cultural implications. The conference’s theme was “Reclaiming Authenticity in Communication.”

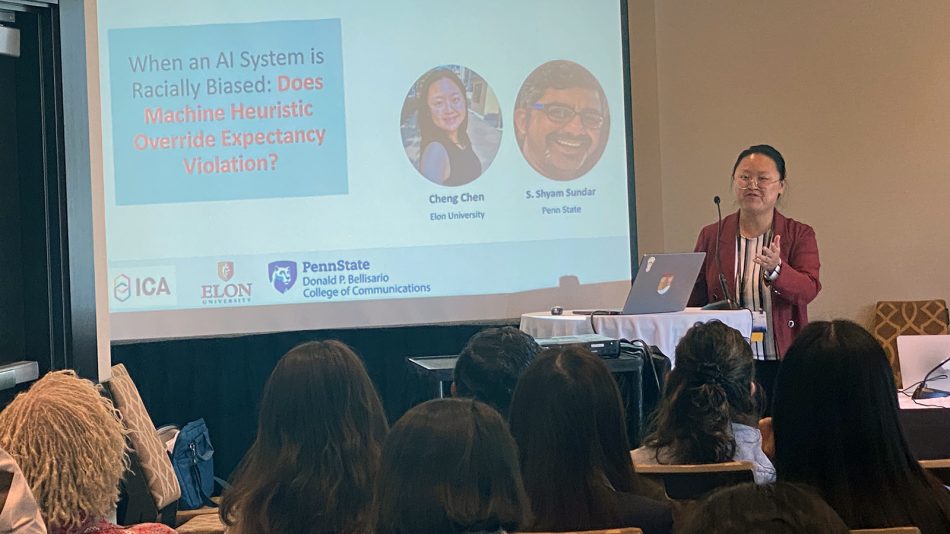

Chen, who has conducted extensive research into AI, social media use and automated features, presented a paper titled “When an AI system is Racially Biased: Does Machine Heuristic Override Expectancy Violations?” The paper, coauthored with Chen’s frequent collaborator S. Shyam Sundar of Pennsylvania State University, found that a positive expectation of AI fairness can restore users’ trust in AI by reducing the negative expectancy violation caused by racial bias in an AI system. However, this indirect effect held only for non-white users with a stronger belief in the machine heuristic, the mental shortcut that machines are more objective and unbiased than humans.

Chen also presented a second paper, titled “When an AI doctor gets personal: The differential effects of social vs. medical individuation,” with coauthors Mengqi (Maggie) Liao of Penn State, Joseph B. Walther of the University of California, Santa Barbara, and Sundar. The researchers asked: “Under what conditions do patients appreciate an AI doctor that recalls their personal information?” In an experiment, they found that patients perceived greater effort from an AI doctor that recalled their social information, which increased patient satisfaction. By contrast, human doctors did not appear to need individuation: patients perceived higher relational benefits when seeing a human doctor, which in turn led to higher satisfaction.

A Ph.D. graduate of Penn State’s Donald P. Bellisario College of Communications, Chen joined Elon in fall 2022 as a full-time faculty member. Her research interests include the social and psychological effects of new media technologies, with a focus on mobile media addiction and algorithmic bias.

In recent months, Chen has examined the habitual and problematic use of Instagram, coauthored an article looking at why individuals use automated features, and published research exploring algorithmic bias and trust in artificial intelligence.

ICA aims to advance the scholarly study of communication by encouraging and facilitating excellence in academic research worldwide. The association began more than 50 years ago as a small group of U.S. researchers and is now a truly international association with more than 5,000 members in over 80 countries. Since 2003, ICA has been officially associated with the United Nations as a nongovernmental organization.